Kremlin-backed actors have stepped up efforts to interfere with the US presidential election by planting disinformation and false narratives on social media and fake news sites, analysts with Microsoft reported Wednesday.

The analysts have identified several unique influence-peddling groups affiliated with the Russian government seeking to influence the election outcome, with the objective in large part to reduce US support of Ukraine and sow domestic infighting. These groups have so far been less active during the current election cycle than they were during previous ones, likely because of a less contested primary season.

Stoking divisions

Over the past 45 days, the groups have seeded a growing number of social media posts and fake news articles that attempt to foment opposition to US support of Ukraine and stoke divisions over hot-button issues such as election fraud. The influence campaigns also raise questions about President Biden’s mental health and promote claims about corrupt judges. In all, Microsoft has tracked scores of such operations in recent weeks.

In a report published Wednesday, the Microsoft analysts wrote:

The deteriorated geopolitical relationship between the United States and Russia leaves the Kremlin with little to lose and much to gain by targeting the US 2024 presidential election. In doing so, Kremlin-backed actors attempt to influence American policy regarding the war in Ukraine, reduce social and political support to NATO, and ensnare the United States in domestic infighting to distract from the world stage. Russia’s efforts thus far in 2024 are not novel, but rather a continuation of a decade-long strategy to “win through the force of politics, rather than the politics of force,” or active measures. Messaging regarding Ukraine—via traditional media and social media—picked up steam over the last two months with a mix of covert and overt campaigns from at least 70 Russia-affiliated activity sets we track.

The most prolific of the influence-peddling groups, Microsoft said, is tied to the Russian Presidential Administration, which, according to the Marshall Center think tank, is a secretive institution that acts as the main gatekeeper for President Vladimir Putin. The affiliation highlights “the increasingly centralized nature of Russian influence campaigns,” a departure from campaigns in previous years that primarily relied on intelligence services and a group known as the Internet Research Agency.

“Each Russian actor has shown the capability and willingness to target English-speaking—and in some cases Spanish-speaking—audiences in the US, pushing social and political disinformation meant to portray Ukrainian President Volodymyr Zelensky as unethical and incompetent, Ukraine as a puppet or failed state, and any American aid to Ukraine as directly supporting a corrupt and conspiratorial regime,” the analysts wrote.

An example is Storm-1516, the name Microsoft uses to track a group seeding anti-Ukraine narratives through US Internet and media sources. Content, published in English, Russian, French, Arabic, and Finnish, frequently originates through disinformation seeded by a purported whistleblower or citizen journalist over a purpose-built video channel and then picked up by a network of Storm-1516-controlled websites posing as independent news sources. These fake news sites reside in the Middle East and Africa as well as in the US, with DC Weekly, Miami Chronicle, and the Intel Drop among them.

Eventually, once the disinformation has circulated in subsequent days, US audiences begin amplifying it, in many cases without being aware of the original source. The following graphic illustrates the flow.

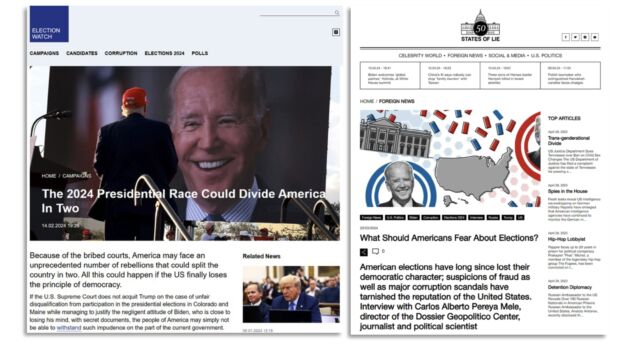

Wednesday’s report also referred to another group tracked as Storm-1099, which is best known for a campaign called Doppelganger. According to the disinformation research group Disinfo Research Lab, the campaign has targeted multiple countries since 2022 with content designed to undermine support for Ukraine and sow divisions among audiences. Two US outlets tied to Storm-1099 are Election Watch and 50 States of Lie, Microsoft said. The image below shows content recently published by the outlets:

Wednesday’s report also touched on two other Kremlin-tied operations. One attempts to revive a campaign perpetuated by NABU Leaks, a website that published content alleging then-Vice President Joe Biden colluded with former Ukrainian leader Petro Poroshenko, according to Reuters. In January, Andrei Derkach, the ex-Ukrainian parliamentarian and US-sanctioned Russian agent responsible for NABU Leaks, reemerged on social media for the first time in two years. In an interview, Derkach propagated both old and new claims about Biden and other US political figures.

The other operation follows a playbook known as hack and leak, in which operatives obtain private information through hacking and leak it to news outlets.

China, Iran, and AI

Microsoft also noted that the governments of China and Iran are actively aiming to influence the outcome of the November elections, although not to the extent the Russian government is.

“Like Russia, China’s election-focused malign influence activity uses a multi-tiered strategy that aims to destabilize targeted countries by exploiting increasing polarization among the public and undermining faith in centuries-old democratic systems,” the analysts wrote. “Tactically, China’s influence operations seek to achieve these goals by capitalizing on existing sociopolitical divides, investing in target-country media outlets, leveraging cyber resources, and aligning its attacks with partisan interests to encourage organic circulation.”

As an example, social media posts by sock puppet agents of the Chinese Communist Party promoted former President Donald Trump and criticized Biden.

So far, predictions from Microsoft and others that convincing deepfake videos would be used to sow mass deception haven’t been borne out. In most cases, the audiences have been able to detect and debunk deepfakes before they had any influence. The analysts went on to write:

Instead, most of the incidents where we’ve observed audiences gravitate toward and share disinformation involve simple digital forgeries consistent with what influence actors over the last decade have regularly employed. For example, fake news stories with spoofed media logos embossed on them—a typical tactic of Russia-affiliated actors—garner several times more views and shares than any fully synthetic generative AI video we’ve observed and assessed.

The scenarios in which AI-generated or AI-enhanced content travels across social media at scale have considerable nuance. The following set of factors and conditions inform our team’s assessment of generative AI risks as we head into a series of elections in 2024.

- AI-enhanced, rather than AI-generated: Fully synthetic deepfake videos of Russian President Vladimir Putin or Ukrainian President Volodymyr Zelensky are relatively routine in today’s social media landscape. These videos, while using quite sophisticated technology, are still not convincing and often rapidly debunked because the entirety of the video or near-entirety of the video is fabricated. Campaigns that mix both real and AI-generated content are more effective—a touch of AI-generated audio overlayed onto authentic video or integrating a piece of AI-generated content within a larger body of authentically produced content, for example—have been more convincing to audiences. These campaigns are also cheaper and simpler to create.

- Audio more impactful than video: Public concerns of generative AI employment have focused on the video medium, and particularly deepfake videos, but audio manipulations have consistently been more impactful on audience perceptions. Fake audio allegedly of politician Michal Šimečka and journalist Monika Tódová during the Slovak presidential election cycle is just one example of more convincing audio content. Training data for generative AI audio is often more available for more people, requires less resourcing to create believable voices, less processing power, and remains harder to debunk without the context clues that AI-generated video can provide.

- Private setting over public setting: AI-generated audio has been more impactful in large part because of the setting in which audiences encounter it. Deepfake videos of world leaders have quickly been refuted by audiences who recognize oddities in the video or footage from the past. Collectively, crowds do well in sniffing out fakes on social media. Individuals independently assessing the veracity of media, however, are less capable. In private settings—during a phone call or on a direct-messaging application—inauthentic content can be difficult to assess, with no alternative opinions or subject-matter expertise by which to verify the authenticity of content.

- Times of crisis and breaking news: Soviet-era psychological warfare and today’s Russian campaigns seize on calamitous messaging to push disinformation. Information consumers are more susceptible to deceptive content when scared or during fast-breaking events when the veracity of reported information may not yet be clear. Last summer, CCP-linked social media accounts published AI-generated images during the Maui wildfires in a coordinated disinformation campaign, as one example. Those Advanced Persistent Manipulators (APMs) staffed with personnel equipped with generative AI tools will be well-positioned to deceive audiences headed into elections.

- Lesser-known impersonations rather than well-known impersonations: Audiences appear better at detecting manipulated content about individuals with whom they are more familiar. US voters have likely seen hundreds or even thousands of videos of 2024’s candidates and thus will be more adept at identifying oddities in the inauthentic content. However, generative AI content regarding individuals or situations with whom an audience is less familiar—local election workers for example—or in languages or regions where an audience has less an understanding may be more impactful and pose a bigger risk to elections in the coming months.

In the months to come, the analysts said, Russia, China, and Iran are likely to increase the pace of influence and disruption campaigns.