On Thursday, renowned AI researcher Andrej Karpathy, formerly of OpenAI and Tesla, tweeted a lighthearted proposal that large language models (LLMs) like the one that runs ChatGPT could one day be modified to operate in or be transmitted to space, potentially to communicate with extraterrestrial life. He said the idea was "just for fun," but with his influential profile in the field, the idea may inspire others in the future.

Karpathy's bona fides in AI almost speak for themselves: He received a PhD from Stanford under computer scientist Dr. Fei-Fei Li in 2015. He then became one of the founding members of OpenAI as a research scientist, then served as senior director of AI at Tesla between 2017 and 2022. In 2023, Karpathy rejoined OpenAI for a year, leaving this past February. He's posted several highly regarded tutorials covering AI concepts on YouTube, and whenever he talks about AI, people listen.

Most recently, Karpathy has been working on a project called "llm.c" that implements the training process for OpenAI's 2019 GPT-2 LLM in pure C, dramatically speeding up the process and demonstrating that working with LLMs doesn't necessarily require complex development environments. The project's streamlined approach and concise codebase sparked Karpathy's imagination.

"My library llm.c is written in pure C, a very well-known, low-level systems language where you have direct control over the program," Karpathy told Ars. "This is in contrast to typical deep learning libraries for training these models, which are written in large, complex code bases. So it is an advantage of llm.c that it is very small and simple, and hence much easier to certify as Space-safe."

Our AI ambassador

In his playful thought experiment (titled "Clearly LLMs must one day run in Space"), Karpathy suggested a two-step plan where, initially, the code for LLMs would be adapted to meet rigorous safety standards, akin to "The Power of 10 Rules" adopted by NASA for space-bound software.

This first part he deemed serious: "We harden llm.c to pass the NASA code standards and style guides, certifying that the code is super safe, safe enough to run in Space," he wrote in his X post. "LLM training/inference in principle should be super safe - it is just one fixed array of floats, and a single, bounded, well-defined loop of dynamics over it. There is no need for memory to grow or shrink in undefined ways, for recursion, or anything like that."

That's important because when software is sent into space, it must operate under strict safety and reliability standards. Karpathy suggests that his code, llm.c, could be hardened to meet these requirements because it is designed with simplicity and predictability at its core.
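
To make that description concrete, here is a minimal C sketch of the shape Karpathy describes: one statically sized array of floats and a loop with a fixed upper bound, with no dynamic allocation and no recursion. It is not taken from llm.c. The names `STATE_LEN`, `MAX_STEPS`, `step`, and `run` are hypothetical, and the update rule is a placeholder for the real model math.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical sizes chosen for illustration; not taken from llm.c. */
#define STATE_LEN 8
#define MAX_STEPS 4

/* One statically allocated array of floats: no malloc, no free. */
static float state[STATE_LEN];

/* One bounded update pass over the array. The loop limit is checked
   against a compile-time constant, and there is no recursion. */
static void step(float *s, size_t len)
{
    assert(s != NULL);
    assert(len <= STATE_LEN);
    for (size_t i = 0; i < len; i++) {
        s[i] = 0.5f * s[i] + 1.0f; /* placeholder for the real model math */
    }
}

/* Run a fixed number of steps and return how many were executed. */
static int run(void)
{
    int steps = 0;
    for (int t = 0; t < MAX_STEPS; t++) {
        step(state, STATE_LEN);
        steps++;
    }
    return steps;
}
```

Because the memory footprint and the iteration count are both known before the program starts, a reviewer can reason about worst-case behavior without running it, which is the kind of property coding rules for space-bound software tend to ask for.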

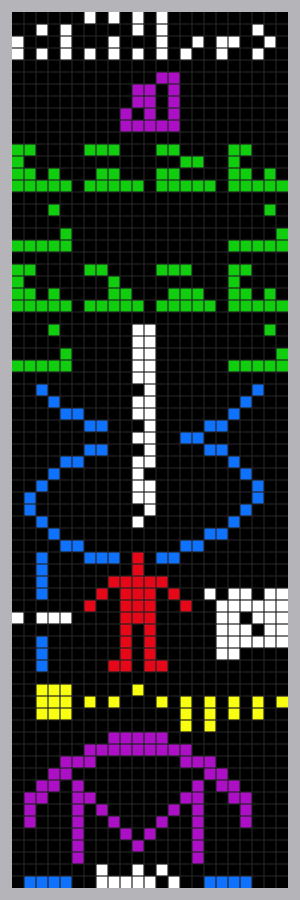

In step 2, once this LLM was deemed safe for space conditions, it could theoretically be used as our AI ambassador in space, similar to historic initiatives like the Arecibo message (a radio message sent from Earth to the Messier 13 globular cluster in 1974) and Voyager's Golden Record (two identical gold-plated records sent on the two Voyager spacecraft in 1977). The idea is to package the "weights" of an LLM (essentially the model's learned parameters) into a binary file that could then "wake up" and interact with any potential alien technology that might decipher it.
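
As a rough illustration of what packaging weights into a binary file can mean, the C sketch below writes an array of floats to disk behind a small header and reads it back. The layout (a 4-byte tag, a 4-byte count, then raw 32-bit floats in the host machine's byte order) is invented for this example, as are the names `WEIGHTS_MAGIC`, `save_weights`, and `load_weights`. It is not the format llm.c or any real model uses.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

/* Hypothetical file layout, invented for this sketch:
   4-byte tag, 4-byte weight count, then raw 32-bit floats
   in the host machine's byte order. */
#define WEIGHTS_MAGIC 0x4C4C4D31u /* arbitrary tag value */

/* Write n weights to path. Returns 0 on success, -1 on failure. */
static int save_weights(const char *path, const float *w, uint32_t n)
{
    assert(path != NULL && w != NULL);
    FILE *f = fopen(path, "wb");
    if (f == NULL) {
        return -1;
    }
    uint32_t header[2] = { WEIGHTS_MAGIC, n };
    int ok = fwrite(header, sizeof header[0], 2, f) == 2
          && fwrite(w, sizeof w[0], n, f) == n;
    if (fclose(f) != 0) {
        ok = 0;
    }
    return ok ? 0 : -1;
}

/* Read weights from path into w, which can hold up to capacity floats.
   Returns the number of weights read, or -1 on any error. */
static int load_weights(const char *path, float *w, uint32_t capacity)
{
    assert(path != NULL && w != NULL);
    FILE *f = fopen(path, "rb");
    if (f == NULL) {
        return -1;
    }
    uint32_t header[2] = { 0, 0 };
    int n = -1;
    if (fread(header, sizeof header[0], 2, f) == 2
        && header[0] == WEIGHTS_MAGIC
        && header[1] <= capacity
        && fread(w, sizeof w[0], header[1], f) == header[1]) {
        n = (int)header[1];
    }
    fclose(f);
    return n;
}
```

Even this toy format leans on unstated conventions, such as byte order and the encoding of a 32-bit float, that a recipient would have to work out before the numbers meant anything.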

"I envision it as a sci-fi possibility and something interesting to think about," he told Ars. "The idea that it is not us that might travel to stars but our AI representatives. Or that the same could be true of other species."

Karpathy isn't the first person to suggest sending LLMs into space. In December 2022, not long after the launch of ChatGPT, University of Vermont robotics professor Josh Bongard tweeted an idea for a sci-fi novel: "We send ChatGPT into space, with instructions for how ETs may query it to learn about humanity."

LLMs represent a highly compressed interactive library of human knowledge that could serve to answer almost any question aliens might have about human civilization (it might also confabulate information, making things up about us where there are gaps in knowledge, but the aliens won't know the difference).

In an October 2023 blog post, software developer Lee Mallon imagined sending an LLM into deep space. "Through this unique exchange, another civilization could get a taste of our culture, our kindness, our artistic expressions, and the leaps and bounds we've made in technology and understanding our world," he wrote. "They could listen to our poems, read our stories, and maybe even appreciate a good meme or pixel art, seeing the brighter sides of our human nature."

Of course, to run the space-bound LLM, the extraterrestrial civilization would need to possess advanced computational capabilities and an understanding of the format in which the model's weights are encoded. They'd need a compatible hardware architecture capable of processing the matrix operations involved in running the model, as well as a software framework that could interpret the binary file and initialize the LLM. But even if we don't provide instructions on how to build all of that, a smart enough species might be able to figure it out for themselves.

"Running it would, I think, be easy," Karpathy told us, "because the core instructions set could be made very small, e.g., even just a single NAND gate, which is all by itself universal. The bigger problem would be that they will get text as the output, for example in English, and would have to learn themselves how to interpret it or what it means, by interacting with the LLM over a long period of time. It's not obvious!"

Despite these challenges, if the extraterrestrial civilization were to decipher and run the LLM successfully, it could open up a new avenue for interstellar communication and knowledge exchange, assuming the model was trained on a range of human knowledge. But given known biases in AI training datasets, we can only imagine the fights that might break out over who gets to decide what goes into the LLM we hypothetically send into space.
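
Karpathy's remark that a single NAND gate is universal can be shown in a few lines of C. The sketch below builds NOT, AND, OR, and XOR out of nothing but a NAND function, then combines them into a half adder, the first building block of binary arithmetic. The function names are ours and purely illustrative; nothing here comes from llm.c.

```c
#include <assert.h>
#include <stddef.h>

/* The only primitive: output is 0 only when both inputs are 1. */
static int nand_gate(int a, int b) { return !(a && b); }

/* Every other gate below is built from nand_gate alone. */
static int not_gate(int a) { return nand_gate(a, a); }

static int and_gate(int a, int b) { return not_gate(nand_gate(a, b)); }

static int or_gate(int a, int b)
{
    return nand_gate(not_gate(a), not_gate(b));
}

static int xor_gate(int a, int b)
{
    int n = nand_gate(a, b);
    return nand_gate(nand_gate(a, n), nand_gate(b, n));
}

/* Half adder: adds two one-bit inputs, producing a sum bit and a carry bit. */
static void half_adder(int a, int b, int *sum, int *carry)
{
    assert(sum != NULL && carry != NULL);
    *sum = xor_gate(a, b);
    *carry = and_gate(a, b);
}
```

Chaining adders like this one is, in principle, enough to build up the arithmetic a model needs, which is why the instruction set could be described so compactly.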

But is it a good idea?

The idea of broadcasting or launching an LLM into space raises interesting safety and ethics questions. Sending messages to alien civilizations, often called active SETI (search for extraterrestrial intelligence), has long been controversial.

Critics typically argue that deliberately broadcasting our presence and technological capabilities to unknown alien civilizations could attract unwanted attention from hostile entities, putting our planet or species at risk.

Karpathy himself is aware of the risks. "Oh, absolutely, major safety concerns. See the Three Body Problem," he told us, referring to Liu Cixin's famous 2008 novel, in which an extraterrestrial civilization intercepts a message from Earth and subsequently launches an invasion.

Another risk, aside from a hostile takeover, is that the receiving aliens might get the wrong idea about us from the LLM if it were trained on a broad spectrum of human culture.

In his blog post, Lee Mallon wrote, "As they conversate through the depths of our digital knowledge, they'd also stumble upon the darker chapters of our story. They'd learn about our knack for warfare, our greed, and the many destructive tools we've crafted. This could paint a scary picture, making us appear as potential threats. They might start to wonder if giving us a cosmic call is a good idea or a recipe for disaster or if we need to be removed from the chessboard altogether."

But even if we trained the LLM solely on humanity's better attributes, it might not make the best interstellar diplomat, given its technical drawbacks and the delicacy of first contact.

"Goodness, the idea of LLMs as our representatives to other species is terrifying," said Ars Technica Senior Space Editor Eric Berger. "Is it a good idea? God, no. It seems that if you were to chat with an LLM long enough it would start to get hallucinogenic. Or like when Kevin Roose was asked to leave his wife. That sort of thing. Any interaction with a new species would probably be a very delicate thing, requiring an incredible amount of nuance."

Putting delicate nuance aside for the sake of the thought experiment, if LLMs are going to serve as our interstellar ambassadors, Karpathy jokes about the importance of sending highly polished code to represent humanity to the broader universe: "Maybe one day we'll ourselves find LLMs of aliens out there, instead of them directly," he tweeted. "Maybe the LLMs will find each other. We'd have to make sure the code is really good, otherwise that would be kind of embarrassing."