As the US moves toward criminalizing deepfakes (deceptive AI-generated audio, images, and videos that are increasingly hard to distinguish from authentic content online), tech companies have rushed to roll out tools to help everyone better detect AI content.

But efforts so far have been imperfect, and experts fear that social media platforms may not be ready to handle the ensuing AI chaos during major global elections in 2024, despite tech giants' commitments to build tools specifically to combat AI-fueled election disinformation. The best AI detector remains the observant human, who, by paying close attention, can pick up on flaws like AI-generated people with extra fingers or AI voices that speak without pausing for a breath.

In one of the splashiest announcements this week, OpenAI today shared details about a new AI image detection classifier that it claims can detect about 98 percent of outputs from its own sophisticated image generator, DALL-E 3. It also "currently flags approximately 5 to 10 percent of images generated by other AI models," OpenAI's blog said.

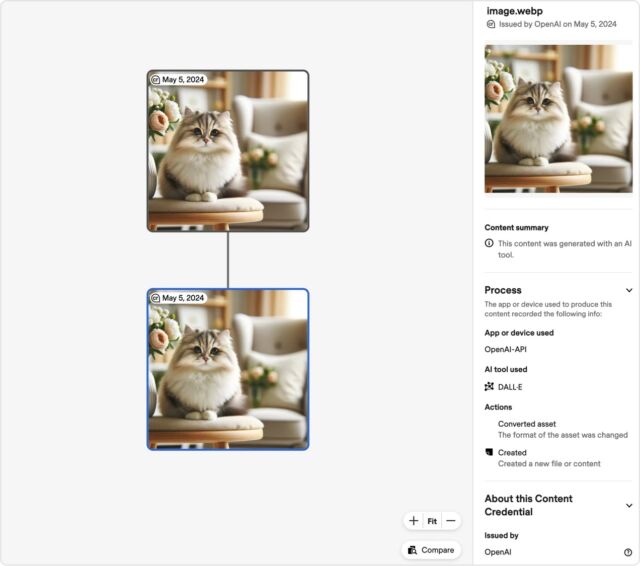

According to OpenAI, the classifier provides a binary "true/false" response "indicating the likelihood of the image being AI-generated by DALL·E 3." A screenshot of the tool shows that it can also display a straightforward content summary confirming that "this content was generated with an AI tool," along with fields that ideally identify the "app or device" and the AI tool used.

To develop the tool, OpenAI spent months adding tamper-resistant metadata to "all images created and edited by DALL·E 3" that "can be used to prove the content comes" from "a particular source." The detector reads this metadata to accurately flag DALL-E 3 images as fake.

That metadata follows "a widely used standard for digital content certification," often likened to a nutrition label, that was set by the Coalition for Content Provenance and Authenticity (C2PA). Reinforcing that standard has become "an important aspect" of OpenAI's approach to AI detection beyond DALL-E 3, OpenAI said. When OpenAI broadly launches its video generator Sora, C2PA metadata will be integrated into that tool as well.

Of course, this solution is not comprehensive. "People can still create deceptive content without this information (or can remove it)," OpenAI said, "but they cannot easily fake or alter this information, making it an important resource to build trust."
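The trade-off OpenAI describes (metadata can be stripped, but not easily forged) can be illustrated with a minimal Python sketch. It is a toy model under stated assumptions, not the C2PA format: the names `attach_manifest`, `check_provenance`, and `SIGNING_KEY` are hypothetical, and a shared HMAC key stands in for the certificate-based signatures that C2PA actually relies on. A valid manifest names the generator, an altered one fails verification, and a stripped one leaves the image's origin simply unknown.

```python
import hashlib
import hmac
import json

# Hypothetical shared secret. Real C2PA manifests use certificate-based
# (public-key) signatures, not an HMAC key; this is a toy stand-in.
SIGNING_KEY = b"hypothetical-generator-key"


def _sign(claim: dict) -> str:
    """Sign a canonical JSON encoding of the provenance claim."""
    payload = json.dumps(claim, sort_keys=True).encode()
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def attach_manifest(image: bytes, generator: str) -> dict:
    """Bundle image bytes with a signed provenance claim (toy model)."""
    claim = {"generator": generator, "sha256": hashlib.sha256(image).hexdigest()}
    return {"image": image, "manifest": {"claim": claim, "signature": _sign(claim)}}


def check_provenance(asset: dict) -> str:
    """Return 'unknown', 'tampered', or the generator named in a valid manifest."""
    manifest = asset.get("manifest")
    if manifest is None:
        # Stripped metadata proves nothing: the image may or may not be AI-made.
        return "unknown"
    claim = manifest["claim"]
    if not hmac.compare_digest(_sign(claim), manifest["signature"]):
        return "tampered"  # the claim was edited after signing
    if claim["sha256"] != hashlib.sha256(asset["image"]).hexdigest():
        return "tampered"  # the image bytes no longer match the signed hash
    return claim["generator"]


original = attach_manifest(b"raw pixel bytes", "DALL-E 3")
print(check_provenance(original))                      # DALL-E 3
print(check_provenance({"image": original["image"]}))  # unknown
```

One design note: because HMAC uses a shared secret, anyone holding the key could also forge manifests. Public-key signatures avoid that problem, letting anyone verify a manifest while only the key holder can sign one.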

Because OpenAI is all in on C2PA, the AI leader announced today that it would join the C2PA steering committee to help drive broader adoption of the standard. OpenAI will also launch a $2 million fund with Microsoft to support broader "AI education and understanding," partly, it seems, in the hope that the more people understand the importance of AI detection, the less likely they will be to remove this metadata.

"As adoption of the standard increases, this information can accompany content through its lifecycle of sharing, modification, and reuse," OpenAI said. "Over time, we believe this kind of metadata will be something people come to expect, filling a crucial gap in digital content authenticity practices."

OpenAI joining the committee "marks a significant milestone for the C2PA and will help advance the coalition’s mission to increase transparency around digital media as AI-generated content becomes more prevalent," C2PA said in a blog.

Recruiting the “first group of testers” for its deepfake detector

Presumably, if all image and video generators adopted the C2PA standard, OpenAI's classifier could improve its accuracy in detecting content from other AI tools. In the meantime, OpenAI is pushing forward with improving the tool by putting out a call for researchers to become the "first group of testers." Applications are due by July 31, and applicants will be notified by August 31 whether they have been accepted.

"Our goal is to enable independent research that assesses the classifier's effectiveness, analyzes its real-world application, surfaces relevant considerations for such use, and explores the characteristics of AI-generated content," OpenAI's blog said.

OpenAI is particularly interested in recruiting researchers who can "stress test the classifier across diverse image types, such as medical images and photorealistic simulations," investigate biases, test whether the classifier itself can be manipulated by bad actors, and compare the tool's performance to human detection of AI.

The AI company also wants to connect with researchers interested in exploring "the prevalence and characteristics of AI-generated images in various online environments." Sandhini Agarwal, an OpenAI safety researcher, told The New York Times that, ultimately, OpenAI's plan is to "kick-start new research" that is "really needed."

Currently, the job of fact-checking fake AI images falls to social media users and platforms, but neither seems to be making much progress in stopping the spread of disinformation.

Social media platforms, perhaps most notably X (formerly Twitter), have struggled to contain the spread of fake AI images featuring everyone from the Pope to Donald Trump. In March, the Center for Countering Digital Hate (CCDH) reported that mentions of AI in X's fact-checking system, Community Notes, "increased at an average of 130 percent per month" between January 2023 and January 2024.

This "indicates that disinformation featuring AI-generated images is rising sharply" on X, seemingly increasing the risk that "images that could support disinformation about candidates or claims of election fraud" may spread widely, the CCDH warned.

While OpenAI invests in research and public education to keep AI images from disrupting elections, its attention will remain divided as it puts out fires caused by its various AI tools. For example, OpenAI will simultaneously be "developing new provenance methods to enhance the integrity of digital content," the company said, including "tamper-resistant watermarking" for AI-generated audio.

Notably, voice generators have already been used to sow confusion during 2024 elections, with robocall scammers mimicking President Joe Biden this January in an effort to urge Democrats not to vote.

Audio watermarking could help reduce confusion and has already been incorporated into OpenAI's Voice Engine, which OpenAI noted is currently available only in a limited research preview. But not every tech company views watermarking as the best way to combat disinformation on that front. McAfee and Intel, for example, recently announced a partnership to use advanced AI techniques to detect audio deepfakes, no watermarks required.

Trusting AI to detect AI manipulation

McAfee's Deepfake Detector, which will initially detect English-language audio, is coming "soon" to Intel-based AI PCs and represents "significant enhancements" to technology for detecting AI-manipulated audio, McAfee announced in a blog. Previously referred to as "Project Mockingbird," the tool currently operates at more than 90 percent accuracy and is expected to be a "game-changer" when it's released to consumers who are increasingly concerned about AI voice manipulation, McAfee boasted.

McAfee's chief technology officer Steve Grobman told Ars why OpenAI's watermarks may not be enough.

"If you make something that's fake and want to make it easier to detect, watermarks are great," Grobman said, but "the problem is there's a large corpus of highly sophisticated tools that won't watermark," so watermarks are "not a comprehensive solution."

"If you're a cybercriminal trying to build a scam where you want to impersonate a celebrity, in order to hawk a product or have an investment scam, you're not going to use one of these technologies that's going to watermark and make it much easier to detect," Grobman said. "You'll use some of the other tools that are readily available that won't watermark. That's the challenge with watermarking."

McAfee's technology is designed to help combat a rise in deepfake voice scams, as well as election disinformation, regardless of which AI tools bad actors use. It leverages "transformer-based Deep Neural Network models" that McAfee said are "expertly trained to detect and notify customers when audio in a video is likely generated or manipulated by AI." It works much as a human listener might, picking up on a range of signals, like unusual vocabulary or places where audio has clearly been edited to stitch clips together.

Grobman demoed the deepfake detector for Ars. It wasn't perfect, but it effectively distinguished between AI-generated audio in a fake Biden-Trump interview and authentic Biden audio from an actual Oval Office address. The obviously fake interview was flagged as more than 90 percent likely to be AI-generated, while Biden's address was marked around 11 percent likely to be AI-generated.

The demo also showed that McAfee's tool detects AI deepfakes more quickly on AI PCs than on a CPU without Intel's advanced AI technology. And Grobman said that because the technology runs locally, consumers avoid both the privacy risks and the wasted time of uploading potentially sensitive audio to the cloud just to check whether it is AI-generated. Instead, one day soon, users could press play on a video and watch a status bar that indicates the likelihood that the audio is AI-generated. The tool appears easy to use, but it still produces some false positives.

Because of those false positives, Grobman told Ars, McAfee is focused on improvements ahead of the Deepfake Detector's release. His team is running tests that challenge its models to detect fake audio in difficult situations, like when a bad actor layers a music track over an AI voice or a scam weaves authentic and fake audio together. His goal is for the detector to have "a low false positive rate" so that users are rarely confused by the tool mistakenly flagging real videos as fake.

"The real world of deepfakes is messy," Grobman told Ars. "Part of what we're doing research on is how do we provide guidance to consumers, even in those messier situations where it's not as crystal-clear as" simply labeling audio AI-generated or not AI-generated, the kind of binary true/false verdict OpenAI's image classifier gives.
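The gap between a graded likelihood score and a binary verdict, and why a "low false positive rate" is a tuning goal, can be shown with a short Python sketch. It converts likelihood scores into true/false flags at a chosen threshold and measures two rates: how often authentic clips are wrongly flagged and how often fakes are caught. The `flag_rate` helper and every score below are hypothetical illustrations, not McAfee's or OpenAI's code or data.

```python
def flag_rate(scores: list[float], threshold: float) -> float:
    """Fraction of clips whose AI-likelihood score meets the threshold."""
    if not scores:
        raise ValueError("need at least one score")
    return sum(score >= threshold for score in scores) / len(scores)


# Hypothetical detector scores (estimated probability the audio is AI-generated).
authentic_clips = [0.11, 0.08, 0.62, 0.35, 0.93]
fake_clips = [0.91, 0.97, 0.55, 0.88]

for threshold in (0.5, 0.9):
    fpr = flag_rate(authentic_clips, threshold)  # real clips wrongly flagged
    tpr = flag_rate(fake_clips, threshold)       # fakes correctly flagged
    print(f"threshold={threshold}: false positives {fpr:.0%}, fakes caught {tpr:.0%}")
```

With these made-up numbers, raising the threshold from 0.5 to 0.9 halves the false positives (40 percent to 20 percent) but also lets half the fakes through. Showing users the score itself, rather than only a yes/no flag, keeps that uncertainty visible.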

Callum Hood, the head of research at the CCDH, told TechCrunch that there is a "very real risk" that elections in the US and globally could be "undermined" by generative AI-fueled disinformation. Hood seemed particularly concerned about AI images being used as "photorealistic propaganda." To defend against this, platforms like TikTok, Instagram, and Facebook plan to label AI images wherever possible, while X will likely continue relying on Community Notes.

Like OpenAI, Grobman's team is also researching ways to help consumers one day reliably detect fake images. Grobman told Ars that McAfee decided to prioritize deepfake audio because doing so could potentially cover more ground: "not all fake video has fake video," he said, but many fake videos layer manipulated audio over legitimate footage.

Still, ahead of this election season, Grobman said that McAfee is exploring the "messy" "real world" of fake images, attempting to detect subtle AI manipulations, like replacing a cellphone in a legitimate photo with a fake gun to change its meaning.

According to the CCDH, most of the fake AI images found spreading on X are generated by four of the most popular image generators: DALL-E 3, DreamStudio, Image Creator, and Midjourney.

OpenAI did not provide Ars with further comment on its image classifier, but a spokesperson told TechCrunch that OpenAI's plan is to "continue to adapt and learn from the use of our tools," hoping to "design mitigations," "prevent abuse," and "improve transparency" as "elections take place around the world."